| nothing | commit () | flushes any queued data and commits the transaction |
|  | constructor (SqlUtil::AbstractTable target, hash mapv, *hash opts) | builds the object based on a hash providing field mappings, data constraints, and optionally custom mapping logic |
|  | destructor () | throws an exception if there is data pending in the block cache |
|  | discard () | discards any buffered batched data; this method should be called if an error occurs after using the batch APIs (queueData()) |
| *hash | flush () | flushes any remaining batched data to the database; this method should always be called before committing the transaction or destroying the object |
| Qore::SQL::AbstractDatasource | getDatasource () | returns the AbstractDatasource object associated with this object |
| *list | getReturning () | returns a list argument for the SqlUtil "returning" option, if applicable |
| SqlUtil::AbstractTable | getTable () | returns the underlying SqlUtil::AbstractTable object |
| string | getTableName () | returns the table name |
| hash | insertRow (hash< auto > rec) | inserts or upserts a row into the target table based on a mapped input record; does not commit the transaction |
| deprecated hash | insertRowNoCommit (hash rec) | Plain alias to insertRow(). Obsolete. Do not use. |
| TableMapper::InboundTableMapperIterator | iterator (Qore::AbstractIterator i) | returns an iterator for the current object |
|  | logOutput (hash h) | ignore logging from Mapper since we may have to log sequence values; output logged manually in insertRow() |
| hash | optionKeys () | returns a list of valid constructor options for this class (can be overridden in subclasses) |
| *hash | queueData (hash< auto > rec, *hash< auto > crec) | inserts/upserts a row (or a set of rows, in case a hash of lists is passed) into the block buffer based on a mapped input record; the block buffer is flushed to the DB if the buffer size reaches the limit defined by the "insert_block" option; does not commit the transaction |
| *hash | queueData (Qore::AbstractIterator iter, *hash crec) | inserts/upserts a set of rows (from an iterator that returns hashes as values where each hash value represents an input record) into the block buffer based on a mapped input record; the block buffer is flushed to the DB if the buffer size reaches the limit defined by the "insert_block" option; does not commit the transaction |
| *hash | queueData (list l, *hash crec) | inserts/upserts a set of rows (list of hashes representing input records) into the block buffer based on a mapped input record; the block buffer is flushed to the DB if the buffer size reaches the limit defined by the "insert_block" option; does not commit the transaction |
| nothing | rollback () | discards any queued data and rolls back the transaction |
|  | setRowCode (*code rowc) | sets a closure or call reference that will be called when data has been sent to the database and all output data is available; must accept a hash argument that represents the data written to the database including any output arguments. This code is reset once the transaction is committed. |
| hash< string, bool > | validKeys () | returns a list of valid field keys for this class (can be overridden in subclasses) |
| hash< string, bool > | validTypes () | returns a list of valid field types for this class (can be overridden in subclasses) |

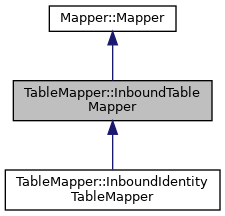

provides an inbound data mapper to a Table target

builds the object based on a hash providing field mappings, data constraints, and optionally custom mapping logic

The target table is also scanned using SqlUtil, and the column definitions are used to update the target record specification. If any column has a NOT NULL constraint and no default value, mapping, or constant value, then a MAP-ERROR exception is thrown.

- Example:

const DbMapper = (

"id": ("sequence": "seq_inventory_example"),

"store_code": "StoreCode",

"product_code": "ProductCode",

"product_desc": "ProductDescription",

"ordered": "Ordered",

"available": "Available",

"in_transit": "InTransit",

"status": ("constant": "01"),

"total": int sub (any x, hash rec) { return rec.Available.toInt() + rec.Ordered.toInt() + rec.InTransit.toInt(); },

);

InboundTableMapper mapper(table, DbMapper);

- Parameters

-

| target | the target table object |

| mapv | a hash providing field mappings; each hash key is the name of the output field; each value is either True (meaning no translations are done; the data is copied 1:1) or a hash describing the mapping; see TableMapper Specification Format for detailed documentation for this option |

| opts | an optional hash of options for the mapper; see Mapper Options for a description of valid mapper options plus the following options specific to this object:

- "unstable_input": set this option to True (default False) if the input passed to the mapper is unstable, meaning that different hash keys or a different hash key order can be passed as input data in each call to insertRow(); if this option is set, then insert speed will be reduced by about 33%; when this option is not set, an optimized insert/upsert approach is used which allows for better performance

- "insert_block": for DB drivers supporting bulk DML (for use with the queueData(), flush(), and discard() methods), the number of rows inserted/upserted at once (default: 1000; only used when "unstable_input" is False and bulk inserts are supported in the table object); see InboundTableMapper Bulk Insert API for more information; note that this is also applied when upserting despite the name

- "rowcode": a per-row closure or call reference for batch inserts/upserts; this must take a single hash argument and will be called for every row after a bulk insert/upsert; the hash argument representing the row inserted/upserted will also contain any output values for inserts if applicable (such as sequence values inserted from "sequence" field options for the given column)

- "upsert": if True then data will be upserted instead of inserted (default is to insert)

- "upsert_strategy": see Upsert Strategy Codes for possible values for the upsert strategy; if this option is present, then "upsert" is also assumed to be True; if not present but "upsert" is True, then SqlUtil::AbstractTable::UpsertAuto is assumed

|

- Exceptions

-

| MAP-ERROR | the map hash has a logical error (ex: "trunc" key given without "maxlen", invalid map key); insert-only options used with the "upsert" option |

| TABLE-ERROR | the table includes a column using an unknown native data type |

- See also

- setRowCode()
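The options described above can be combined in a single options hash. The following is a minimal sketch only: the connection string, the table name, and the callback body are assumptions made for illustration, and it presumes that the hypothetical target table has no further NOT NULL columns without defaults.

```qore
%new-style
%requires SqlUtil
%requires TableMapper

# hypothetical connection string and table name (assumptions for illustration)
Datasource ds("pgsql:user/pass@db");
Table table(ds, "inventory_example");

# field mappings: output column name -> input field name or mapping hash
const DbMapper = {
    "store_code": "StoreCode",
    "product_code": "ProductCode",
    "status": {"constant": "01"},
};

# per-row callback; takes the single hash argument described for "rowcode"
code rowcode = sub (hash<auto> row) {
    printf("row written: %y\n", row);
};

# option names are taken from the option list above
hash<auto> opts = {
    "insert_block": 500,
    "rowcode": rowcode,
    "upsert": True,
};

InboundTableMapper mapper(table, DbMapper, opts);
```

Because "upsert_strategy" is not given here, the text above implies that SqlUtil::AbstractTable::UpsertAuto would be assumed.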

*hash TableMapper::InboundTableMapper::queueData (hash< auto > rec, *hash< auto > crec)

inserts/upserts a row (or a set of rows, in case a hash of lists is passed) into the block buffer based on a mapped input record; the block buffer is flushed to the DB if the buffer size reaches the limit defined by the "insert_block" option; does not commit the transaction

- Example:

{

on_success table_mapper.flush();

on_error table_mapper.discard();

while (*hash h = stmt.fetchColumns(1000)) {

table_mapper.queueData(h);

}

}

{

const Map1 = (

"num": ("a"),

"str": ("b"),

);

Table table(someDataSource, "some_table");

InboundTableMapper table_mapper(table, Map1, ("insert_block" : 3));

on_error table_mapper.discard();

list data = (("a" : 1, "b" : "bar"), ("a" : 2, "b" : "foo"));

list mapped_data = (map table_mapper.queueData($1), data.iterator()) ?? ();

list flushed_data = table_mapper.flush() ?? ();

mapped_data += flushed_data;

}

Data is only inserted/upserted if the block buffer size reaches the limit defined by the "insert_block" option, in which case this method returns all the data inserted/upserted. In case the mapped data is only inserted into the cache, no value is returned.

- Parameters

-

| rec | the input record or record set in case a hash of lists is passed |

| crec | an optional simple hash of data to be added to each input row before mapping |

- Returns

- if batch data was inserted then a hash (columns) of lists (row data) of all data inserted and potentially returned (in case of sequences) from the database server is returned; if constant mappings are used with batch data, then they are returned as single values assigned to the hash keys; always returns a batch (hash of lists)

- Note

- make sure to call flush() before committing the transaction or discard() before rolling back the transaction or destroying the object when using this method

- flush() or discard() needs to be executed for each mapper used in the block when using multiple mappers whereas the DB transaction needs to be committed or rolled back once per datasource

- this method and batched inserts/upserts in general cannot be used when the "unstable_input" option is given in the constructor

- if the "insert_block" option is set to 1, then this method simply calls insertRow(); however please note that in this case the return value is a hash of single value lists corresponding to a batch data insert

- if an error occurs flushing data, the count is reset by calling Mapper::resetCount()

- passing a hash of lists in rec provides very high performance with SQL drivers that support Bulk DML

- in case a hash of empty lists is passed, Qore::NOTHING is returned

- 'crec' does not affect the number of output lines; in particular, if 'rec' is a batch with N rows of a column C and 'crec = ("C" : "mystring")', then the output will be as if there were N rows with C = "mystring" in the input.

- on mappers with "insert_block > 1" (i.e. also the underlying DB must allow for bulk operations), it is not allowed to use both single-record insertions (like insertRow()) and bulk operations (queueData()) in one transaction. Mixing insertRow() with queueData() leads to mismatch of Oracle DB types raising corresponding exceptions depending on particular record types (e.g. "ORA-01722: invalid number").

- See also

-

- Exceptions

-

| MAPPER-BATCH-ERROR | this exception is thrown if this method is called when the "unstable_input" option was given in the constructor |

| MISSING-INPUT | a field marked mandatory is missing |

| STRING-TOO-LONG | a field value exceeds the maximum length and the 'trunc' key is not set |

| INVALID-NUMBER | the field is marked as numeric but the input value contains non-numeric data |
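As an illustration of the hash-of-lists input and the 'crec' argument, here is a hedged sketch. The function name load_batch, the input field names "a", "b", and "c", and the sample values are assumptions; the mapper passed in is presumed to map those fields and to have been created without "unstable_input".

```qore
%new-style
%requires TableMapper

# hypothetical helper; the mapper is assumed to map input fields "a", "b" and "c"
sub load_batch(InboundTableMapper table_mapper) {
    on_success table_mapper.commit();
    on_error table_mapper.rollback();
    {
        # flush the remaining buffered rows on success, drop them on error
        on_success table_mapper.flush();
        on_error table_mapper.discard();

        # a batch of three input rows expressed as a hash of lists
        hash<auto> batch = {
            "a": (1, 2, 3),
            "b": ("foo", "bar", "baz"),
        };

        # the "crec" value is added to each of the three rows before mapping
        *hash<auto> out = table_mapper.queueData(batch, {"c": "mystring"});

        # "out" only has a value if the block buffer reached "insert_block"
        if (out) {
            printf("columns written: %y\n", keys out);
        }
    }
}
```

With the default "insert_block" of 1000, a three-row batch would normally stay in the block buffer, so the rows would only reach the database through the flush() call registered with on_success.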

*hash TableMapper::InboundTableMapper::queueData (Qore::AbstractIterator iter, *hash crec)

inserts/upserts a set of rows (from an iterator that returns hashes as values where each hash value represents an input record) into the block buffer based on a mapped input record; the block buffer is flushed to the DB if the buffer size reaches the limit defined by the "insert_block" option; does not commit the transaction

- Example:

on_success table_mapper.commit();

on_error table_mapper.rollback();

{

on_success table_mapper.flush();

on_error table_mapper.discard();

table_mapper.queueData(data.iterator());

}

Data is only inserted/upserted if the block buffer size reaches the limit defined by the "insert_block" option, in which case this method returns all the data inserted/upserted. In case the mapped data is only inserted into the cache, no value is returned.

- Parameters

-

| iter | iterator over the record set (list of hashes) |

| crec | an optional simple hash of data to be added to each input row before mapping |

- Returns

- if batch data was inserted then a hash (columns) of lists (row data) of all data inserted and potentially returned (in case of sequences) from the database server is returned; if constant mappings are used with batch data, then they are returned as single values assigned to the hash keys

- Note

- make sure to call flush() before committing the transaction or discard() before rolling back the transaction or destroying the object when using this method

- flush() or discard() needs to be executed for each mapper used in the block when using multiple mappers whereas the DB transaction needs to be committed or rolled back once per datasource

- this method and batched inserts/upserts in general cannot be used when the "unstable_input" option is given in the constructor

- if the "insert_block" option is set to 1, then this method simply calls insertRow() to insert the data; nevertheless, it still returns bulk output

- if an error occurs flushing data, the count is reset by calling Mapper::resetCount()

- on mappers with "insert_block > 1" (i.e. also the underlying DB must allow for bulk operations), it is not allowed to use both single-record insertions (like insertRow()) and bulk operations (queueData()) in one transaction. Mixing insertRow() with queueData() leads to mismatch of Oracle DB types raising corresponding exceptions depending on particular record types (e.g. "ORA-01722: invalid number").

- See also

-

- Exceptions

-

| MAPPER-BATCH-ERROR | this exception is thrown if this method is called when the "unstable_input" option was given in the constructor |

| MISSING-INPUT | a field marked mandatory is missing |

| STRING-TOO-LONG | a field value exceeds the maximum length and the 'trunc' key is not set |

| INVALID-NUMBER | the field is marked as numeric but the input value contains non-numeric data |
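The return value described above is a hash of lists in which constant mappings appear as single values. A caller that prefers one hash per row could expand it along the following lines. This is only a sketch: batch_to_rows is a hypothetical helper, not part of the TableMapper module, and it assumes that all list-valued columns have the same length.

```qore
%new-style

# hypothetical helper: expands a batch (hash of lists) into a list of row hashes
list<hash<auto>> sub batch_to_rows(hash<auto> batch) {
    # take the row count from the first list-valued column
    int n = 0;
    foreach auto col in (batch.values()) {
        if (col.typeCode() == NT_LIST) {
            n = col.size();
            break;
        }
    }

    list<hash<auto>> rows = ();
    for (int i = 0; i < n; ++i) {
        hash<auto> row = {};
        foreach string k in (keys batch) {
            auto val = batch{k};
            # list columns contribute their i-th element; constants are repeated
            row{k} = (val.typeCode() == NT_LIST) ? val[i] : val;
        }
        rows += row;
    }
    return rows;
}
```

A batch that contains only constant values and no list column yields an empty list in this sketch, since the row count cannot be derived from it.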

*hash TableMapper::InboundTableMapper::queueData (list l, *hash crec)

inserts/upserts a set of rows (list of hashes representing input records) into the block buffer based on a mapped input record; the block buffer is flushed to the DB if the buffer size reaches the limit defined by the "insert_block" option; does not commit the transaction

- Example:

on_success table_mapper.commit();

on_error table_mapper.rollback();

{

on_success table_mapper.flush();

on_error table_mapper.discard();

table_mapper.queueData(data);

}

Data is only inserted/upserted if the block buffer size reaches the limit defined by the "insert_block" option, in which case this method returns all the data inserted/upserted. In case the mapped data is only inserted into the cache, no value is returned.

- Parameters

-

| l | a list of hashes representing the input records |

| crec | an optional simple hash of data to be added to each row |

- Returns

- if batch data was inserted/upserted then a hash (columns) of lists (row data) of all data inserted/upserted and potentially returned (in case of sequences) from the database server is returned

- Note

- make sure to call flush() before committing the transaction or discard() before rolling back the transaction or destroying the object when using this method

- flush() or discard() needs to be executed for each mapper used in the block when using multiple mappers whereas the DB transaction needs to be committed or rolled back once per datasource

- this method and batched inserts/upserts in general cannot be used when the "unstable_input" option is given in the constructor

- if the "insert_block" option is set to 1, then this method simply calls insertRow()

- if an error occurs flushing data, the count is reset by calling Mapper::resetCount()

- See also

-

- Exceptions

-

| MAPPER-BATCH-ERROR | this exception is thrown if this method is called when the "unstable_input" option was given in the constructor |

| MISSING-INPUT | a field marked mandatory is missing |

| STRING-TOO-LONG | a field value exceeds the maximum length and the 'trunc' key is not set |

| INVALID-NUMBER | the field is marked as numeric but the input value contains non-numeric data |
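The note about multiple mappers can be sketched as follows: each mapper is flushed or discarded individually, while the transaction is committed or rolled back once on the shared datasource. The function name load_two and its parameters are assumptions for illustration, and both mappers are presumed to use the datasource that is passed in.

```qore
%new-style
%requires TableMapper

# hypothetical helper; m1 and m2 are assumed to share the datasource "ds"
sub load_two(AbstractDatasource ds, InboundTableMapper m1, InboundTableMapper m2,
             list<auto> l1, list<auto> l2) {
    # one commit or rollback per datasource
    on_success ds.commit();
    on_error ds.rollback();
    {
        # one flush() or discard() per mapper
        on_success m2.flush();
        on_success m1.flush();
        on_error m2.discard();
        on_error m1.discard();

        # each list holds hashes representing input records
        m1.queueData(l1);
        m2.queueData(l2);
    }
}
```

The inner block ensures that the flush() or discard() calls run before the outer commit or rollback, matching the nesting used in the examples above.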